Always Be Curious #246: HBM explained, SK hynix is flying, and solar backpacks

This week in ABC: A closer look at High Bandwidth Memory, South Korean chipmaker SK hynix posts record earnings, and solar backpacks supply light after dark in Tanzania

This week, South Korean memory giant SK hynix reported record earnings, fueled by the AI-driven demand for high-bandwidth memory (HBM). In just a few years, the HBM side of its business has shown crazy growth, now making up more than 40% of the total DRAM revenue. This trend has completely altered SK hynix’s financial situation and outlook. As their CFO Kim Woo-Hyun put it: “With a significantly increased portion of high value-added products, SK hynix has built fundamentals to achieve sustainable revenues and profits even in times of market correction.” It’s remarkable to see this trending memory technology bring such powerful growth, so this week, I wrote the following explainer to take a closer look at HBM. 🔎✨ Read on, my dear Curious Clan! 👇

Oh, and if you get value out of my writing, then please do share this explainer with your own network and on social media. Or better yet, support ABC with a paid subscription! Thank you. 🙏

Some basics: the OG of computing, Von Neumann

Traditional computing has been built on the ideas of John von Neumann, a computer pioneer at Princeton University in the 1940s. In his computing architecture, there’s a clear separation between the central processing unit (CPU), memory storage, and the data bus that connects them. The CPU performs logical computations, while the working memory (Dynamic Random Access Memory or DRAM) holds both the program instructions and the data needed by those instructions.

However, the data bus between the CPU and memory can only transport a limited amount of data at a time. As computers and software became more advanced over time, the CPU's ability to process data increased much faster than the ability to move data in and out of memory. This discrepancy created the (you guessed it) “von Neumann bottleneck”. Simply put, it slows down computation because the processor often has to wait for data to be delivered from memory. Many of the innovations that you might catch in the news, like advanced packaging and High Bandwidth Memory, help to address this particular challenge.
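To make the bottleneck a bit more tangible, here is a minimal back-of-the-envelope sketch in Python. It assumes a deliberately simplified model in which a task takes as long as the slower of two things: doing the math, or moving the data. The function name `estimate_runtime_s` and every number in it are made up for illustration and do not describe any real chip.

```python
def estimate_runtime_s(flops, bytes_moved, peak_flops_per_s, mem_bandwidth_bytes_per_s):
    """Simplified model: runtime is set by the slower of compute and data movement."""
    compute_time = flops / peak_flops_per_s
    memory_time = bytes_moved / mem_bandwidth_bytes_per_s
    bottleneck = "memory" if memory_time > compute_time else "compute"
    return max(compute_time, memory_time), bottleneck


# Hypothetical workload on a hypothetical processor (illustrative numbers only).
runtime, limit = estimate_runtime_s(
    flops=1e12,                      # 1 trillion operations to perform
    bytes_moved=1e11,                # 100 GB of traffic to and from memory
    peak_flops_per_s=1e13,           # the processor could do the math in 0.1 s
    mem_bandwidth_bytes_per_s=1e11,  # but memory needs 1 s to deliver the data
)
print(f"{runtime:.1f} s, limited by {limit}")
```

In this toy case the processor sits idle for most of the run while it waits for data, which is the von Neumann bottleneck in a nutshell.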

So DRAM’s not cutting it?

Not that simple! After all these years, DRAM memory is still the go-to solution for working memory. Innovations have mostly focused on increasing memory cell capacity (with new manufacturing processes) and on increasing the bandwidth (how fast data can move in and out of the DRAM). Today’s computers use a faster DRAM memory class called “DDR” (Double Data Rate). For example, your desktop PC likely has DDR5 in there, your tablet might have the more energy-efficient Low-Power Double Data Rate (LPDDR), and computationally intensive applications like gaming, automotive computation and some AI applications harness GDDR (Graphics Double Data Rate). Think of DRAM memory bandwidth as a stream of water. DDR is like a faucet. It’s a narrow stream, but it flows fast.🚰

Of the various types, GDDR has the fastest clock speeds (so it delivers the fastest stream), but this also means more power consumption and heat generation, which limits just how scalable it is. In particular, these memory solutions run into limitations when scaling up for the absolutely humongous workloads in generative AI.

And this is where HBM comes in. 💪

High Bandwidth Memory has entered the chat 👀

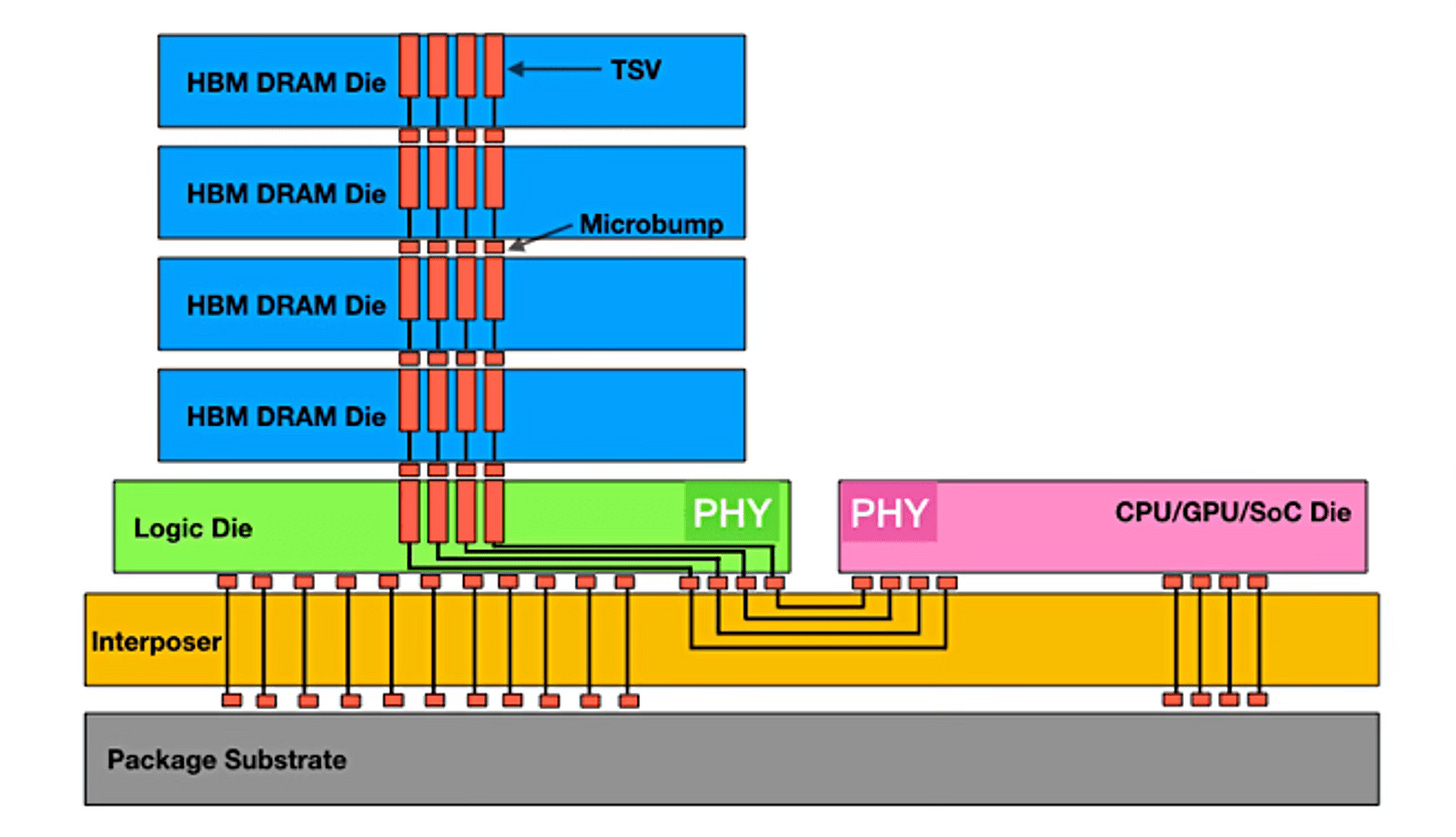

HBM isn’t a new type of memory cell. Instead, it’s an industry standard for how to harness multiple DRAM memory chips to work together with logic chips. HBM vertically stacks multiple layers of memory chips on top of each other and then connects them with each other, before connecting them to the logic chip. Sounds pretty straightforward. It’s not. 😬 A ton of innovation had to happen to make this work. To name a few key innovations:

TSVs (Through-Silicon Vias): Tiny vertical channels that allow data to move quickly between stacked memory layers.

Microbumps: Special connections between memory and processors to improve speed and reduce power loss.

Silicon interposer: A special layer inside advanced chips that basically acts like a sort of internal printed circuit board or ‘motherboard’, connecting various chips to work together.

The work on these innovations started halfway through the 2000s, with AMD and (you guessed it!) SK hynix leading the way. The first HBM product was introduced in 2015 (AMD’s Radeon R9 Fury GPU), kickstarting an absolute niche of just a few percent of the DRAM market. But since then, the HBM market has grown fast, piggybacking on the rise of AI. 📊

The reason for this is that HBM delivers next-level bandwidth. The industry is currently mass producing HBM3 and developing next-gen HBM4, which continue to stack memory and boost bandwidth. To compare it to our stream of water: instead of a narrow stream for bandwidth, HBM uses a very wide memory bus and operates at lower clock speeds, essentially delivering a slower but much wider stream of water. 🚿 This design moves more data with less energy, making it much more power-efficient—ideal for the most power-hungry AI systems. 💡
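For the number lovers, the faucet-versus-shower picture can be put into a quick sketch. Peak bandwidth is roughly the bus width multiplied by the data rate per pin. The configurations below are assumptions on my part (commonly quoted figures for each memory class, not numbers from this explainer), and the helper name `peak_bandwidth_gb_per_s` is purely illustrative.

```python
def peak_bandwidth_gb_per_s(bus_width_bits, gbit_per_s_per_pin):
    """Peak bandwidth = bus width x per-pin data rate, converted from bits to bytes."""
    return bus_width_bits * gbit_per_s_per_pin / 8


# Assumed, commonly quoted configurations (illustrative, not from the article):
# (bus width in bits, data rate per pin in Gbit/s)
configs = {
    "DDR5-4800 module (64-bit)": (64, 4.8),
    "GDDR6 chip (32-bit)": (32, 16.0),
    "HBM3 stack (1024-bit)": (1024, 6.4),
}

for name, (width, rate) in configs.items():
    print(f"{name}: {peak_bandwidth_gb_per_s(width, rate):.1f} GB/s")
```

Under these assumptions, the per-pin rate of the HBM3 stack is well below that of the GDDR6 chip, yet its much wider bus gives it far more total bandwidth. Keep in mind that a graphics card combines several GDDR chips, so this compares single devices rather than complete systems.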

Market dynamics (2025-2030s)

The AI boom has completely changed the memory market, with the HBM segment poised to expand at an average of 42% a year over the coming decade. 📈 Currently, SK hynix leads the market with a share of more than 50%, followed by Samsung with around 40%, while Micron comes in third with around 10%. But as the HBM market is projected to grow from around $4 billion in 2023 to over $130 billion by 2033 (!), there’s plenty of rivalry, opportunity and ground to be gained. However, experts generally believe that SK hynix will keep its leading position because of its first-mover advantage and deep technology expertise. 🏆
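A quick sanity check on those growth numbers, as a minimal sketch: compounding the 2023 market size at 42% a year for ten years should land in the neighborhood of the 2033 figure. The function names are my own, and the calculation assumes a constant growth rate every year, which real markets rarely deliver.

```python
def project(value, annual_growth, years):
    """Compound a starting value at a constant annual growth rate."""
    return value * (1 + annual_growth) ** years


def implied_cagr(start, end, years):
    """Constant annual growth rate that turns `start` into `end` over `years`."""
    return (end / start) ** (1 / years) - 1


# Figures from the paragraph above, in billions of US dollars.
projected_2033 = project(4.0, 0.42, 10)
growth_needed = implied_cagr(4.0, 130.0, 10)

print(f"2033 market at 42% a year: ${projected_2033:.0f} billion")
print(f"Growth rate needed to reach $130 billion: {growth_needed:.1%}")
```

The projection comes out at roughly $133 billion, so the 42% growth rate and the "over $130 billion" figure are consistent with each other.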

To give you a sense of the heated competition: Micron got its foot in the door with AI chip champion NVIDIA, supplying 8-layer HBM3E chips. But its share remains small compared to SK hynix, which leads with 12-layer HBM3E chips. 💪 SK hynix was also the first to launch a 16-layer HBM3E (November 2024), but Micron is reportedly doing final testing on its own 16-layer HBM3E chips, with mass production just around the corner. 🔥

The next-gen HBM4 is expected to be introduced in 2026 and to contribute meaningfully to market revenue by 2027. This generation may bring even more competitive pressure from Micron and Samsung on market leader SK hynix, because new manufacturing processes will allow for more differentiated approaches to improve how HBM and GPUs work together. 🏗️🚧

SK hynix is currently developing a 12-layer HBM4, which is expected to become its flagship product from 2026 onwards, with the 16-layer HBM4 following from the second half of 2026. Micron’s HBM4 is expected to enter mass production in 2026, followed by HBM4E in 2027-2028. In an effort to beat the pack, Samsung has accelerated its HBM4 memory development, aiming to start production by late 2025—shaving six months off its original timeline. This is how it hopes to make inroads with the key AI companies.

So, you see, the HBM battle is on. 🥊 I hope this explainer gave you a good sense of the technology and the dynamics at play. 🙏 AI workloads involve processing massive datasets and running complex algorithms, which require lots of fast, efficient memory. HBM offers the bandwidth and capacity needed without guzzling power, making it the go-to memory for AI accelerators right now…and in the coming decade. 🙌

Have a good week, stay safe and sound,

👨‍💻The round-up in sci-tech💡

🇺🇸 Trump announces $100 billion AI initiative (The New York Times) 🎁

OpenAI, Oracle and SoftBank formed a new joint venture called Stargate to invest in data centers, building on major U.S. investments in the technology.

🔮 Davos panel - The dawn of AGI? (World Economic Forum)

The Atlantic’s Nicholas Thompson (arguably one of the best tech reporters out there) hosted a remarkable panel discussion about Artificial General Intelligence with experts Andrew Ng, Yejin Choi, Jonathan Ross, Yoshua Bengio, and Thomas Wolf. They discussed how much of a risk society will face if and when AGI is created, the differences between human and machine intelligence, how to embed ethical and pluralistic values into AI, how much the recent breakthroughs by DeepSeek mean, and whether AI is headed in a direction of benevolence--or the opposite.

🔮 Anthropic chief says AI could surpass “almost all humans at almost everything” shortly after 2027 (Ars Technica)

Amodei: “I think progress really is as fast as people think it is.”…

☀️ China’s ‘artificial sun’ sets nuclear fusion record, runs 1,006 seconds at 180 million °F (Charming Science)

China's "artificial sun," officially known as the Experimental Advanced Superconducting Tokamak (EAST), has achieved a groundbreaking milestone in fusion, running for 18 minutes straight at 100 million degrees Celsius. 🤯

👑 The tech oligarchy arrives (The Atlantic)

Donald Trump’s inauguration signaled a new alliance—for now—with some of the world’s wealthiest men.

💚 Twelve dudes and a hype tunnel: scenes from the ‘Super Bowl for Excel nerds’ (The New York Times)🎁

At the Microsoft Excel World Championship in Las Vegas, there was stardust in the air as 12 finance guys vied to be crowned the world’s best spreadsheeter.

“You’d never see this with Google Sheets. You’d never get this level of passion.”

🛰️ A spy satellite you’ve never heard of helped win the Cold War (IEEE Spectrum)

Engineers at the Naval Research Lab launched a spy satellite program called Parcae and revolutionized signals intelligence at the height of the Cold War. The program relied on computers to sift through intelligence data, providing a technological edge at a pivotal moment in the Cold War.

🤓This week in chips⚠

🇰🇷💰 SK hynix becomes most profitable Korean company in Q4 2024 (Korea Times)

SK hynix posted multiple record highs in its 2024 earnings, boosted by the robust sales of its high-bandwidth memory (HBM) chips tailored specifically for artificial intelligence (AI) computing.

📈 Riding high on AI boom, SK hynix beats earnings estimates (Korea Herald)

Buoyed by record sales and profits, SK hynix logged its best-ever yearly earnings in 2024, successfully capitalizing on the global AI chip boom with its cutting-edge high bandwidth memory products.

🚀 SK Hynix profit surpasses Samsung, but shares dip on lower memory chip demand (South China Morning Post)

The company said supply will remain tight for high-performance chips, but demand declines will accelerate for legacy products.

🤓 The AI boom is giving rise to "GPU-as-a-Service” (IEEE Spectrum)

The industry harvests idle compute for AI startups that need it.

🇹🇼 TSMC reportedly building two more CoWoS facilities, debunking order cut rumors (Trendforce)

Construction at the STSP Phase III site is expected to begin as early as March 2025, and the two new facilities are projected to be completed by April 2026, with equipment installation likely commencing shortly thereafter, as the report notes.

🎧 Tech Unheard Episode 3: Chris Miller (Arm)

Arm CEO Rene Haas talks with Chris Miller, author of 'Chip War: The Fight for the World’s Most Critical Technology' about the past, present, and future of the semiconductor industry.

✨ Lightmatter’s optical interposers could start speeding up AI in 2025 (IEEE Spectrum)

Lightmatter uses light signals inside a processor's package.

🤪 China's second-largest foundry hires former Intel executive to lead advanced node development (Tom’s Hardware)

The Intel veteran will focus on advanced logic production.

📈By the numbers📉

🇰🇷 SK hynix announces Q4 and full-year 2024 financial results (SK hynix)

“Achieving best-ever quarterly and yearly performance with increased sales of AI memory products including HBM and eSSD. Company to affirm the possibility of achieving sustainable profit through differentiation of AI product competitiveness and profitability-oriented operation.”

🇺🇸 TI reports Q4 2024 and 2024 financial results (Texas Instruments)

"Revenue decreased 3% sequentially and 2% from the same quarter a year ago. Our cash flow from operations of $6.3 billion for the trailing 12 months again underscored the strength of our business model, the quality of our product portfolio and the benefit of 300mm production.”

📄 Contrary’s Tech Trends 2025 Report (Contrary)

Must-read report with plenty of interesting nuggets and insights on emerging tech!

❤️For the love of tech❤️

🎒 Solar-charging backpacks are helping children in rural Tanzania to read after dark (CNN)

Soma Bags makes backpacks equipped with solar panels that power a light, enabling children in rural Tanzania to read even when the sun goes down.

Always Be Curious is the personal newsletter of Sander Hofman, Senior Creative Content Strategist at ASML. Opinions expressed in this curated newsletter are my own and do not necessarily reflect those of my employer.

Clear, well written! One comment: John von Neumann was not just a computer pioneer. He is considered one of the greatest mathematicians of modern history and made great contributions to fundamental physics as well.

If the AI workload shifts significantly from training to inference, what effect does that have on memory demand?